February 28, 2025

What is Latency? Types, Impact and Ways to Reduce It

In our fast-paced digital age, speed has become a non-negotiable expectation. What was once acceptable, such as waiting hours to download a movie or even days for a mobile internet bundle to be activated, is now unthinkable. Today, users demand almost immediate loading times, and even a few seconds of delay on a website can lead to frustration.

Whether you're watching video on a streaming platform, gaming online, shopping, or working in a cloud-based app, the immediacy of these experiences is crucial. Central to this responsiveness is network latency, the often-irritating lag between a user's action and the network's response.

In Bangladesh, where digital services are expanding rapidly, the focus on reducing network latency is essential to meet user expectations and keep pace with global standards.

In this article, we’ll look at what latency is, the forms it takes, what causes it, how to measure it, and how to reduce it.

What is Latency?

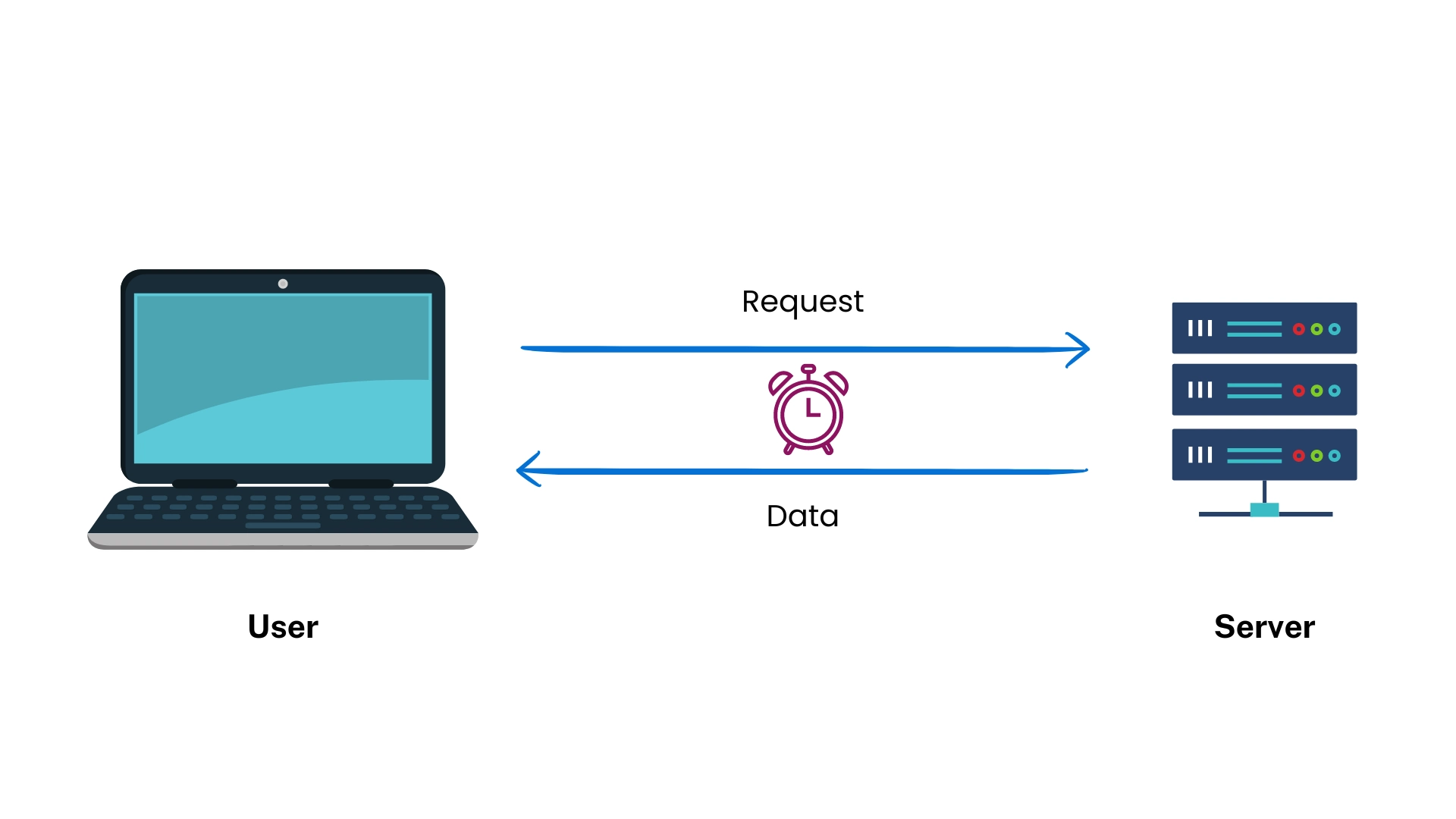

Latency is the delay between an action and the response to it. Online, that means the time between a request leaving your device and the response arriving back. For example, when you click to open a website or start a video, latency is the delay between your click and the moment the website or video actually shows up.

If the latency is low, things happen quickly, but if it's high, you’ll notice a delay, which can slow down activities like streaming or playing games online.

Picture this: you open Netflix and pick a movie to watch. Even after you click play, the screen keeps loading and the movie takes forever to start. Seconds pass, and your frustration grows as the video refuses to play smoothly, ruining the experience you were looking forward to.

Factors like distance, hardware quality, network traffic, and software efficiency affect latency. To reduce it, you might need to upgrade hardware, improve network setups, and optimize code. Managing latency is crucial for better system performance and user experience.

Types of Latency

Delays arise at different points in computing and networking systems. Here’s a concise overview of the key latency types:

Network Latency: Network latency is the time it takes for data to travel across a network. It is the sum of four delays: propagation (the time the signal needs to cover the distance), transmission (the time needed to put the data onto the link), processing (the time routers and switches spend handling each packet), and queuing (the time packets wait in line at busy devices). Network latency affects activities like browsing the web, making video calls, and playing online games.

Disk Latency: Disk latency is the time it takes to access data on storage devices like hard drives (HDDs) or solid-state drives (SSDs). On an HDD, it includes the time needed to move the read head to the right track (seek time), wait for the platter to spin to the right position (rotational latency), and actually transfer the data. SSDs have no moving parts, so they skip the first two steps and respond much faster. Disk latency affects how quickly files open or data is retrieved.

Memory Latency: Memory latency is the delay between when the CPU asks for data from RAM and when it actually gets it. An important part of this is CAS latency, the number of memory clock cycles between a read command and the moment the data becomes available. Memory latency affects how quickly programs can access and use stored information.

Processing Latency: Processing latency is the time it takes for a system to handle an input and produce an output. This includes delays in how long it takes to execute instructions and how efficiently the system can manage multiple tasks at once. These delays affect things like rendering images and processing data.

Input Latency: Input latency is the time between when you do something (like press a key) and when the system responds. It’s especially important for activities that need quick reactions, such as gaming and interactive apps.

Application Latency: Application latency is the delay inside an app, often because the app is processing or waiting for replies from other services. This affects how quickly the app reacts to user actions, like loading a webpage or saving a file.
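Two of the hardware delays above can be estimated with simple arithmetic. The sketch below is illustrative only: the function names are made up for this example, and the 7200 RPM drive and DDR4-3200 CL16 memory are sample figures chosen for the calculation, not measurements. It assumes that a hard drive waits half a revolution on average, and that DDR memory transfers data twice per clock cycle.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Average wait for the platter to reach the right position: half a revolution."""
    seconds_per_revolution = 60.0 / rpm
    return 0.5 * seconds_per_revolution * 1000.0


def cas_latency_ns(cas_cycles: int, transfer_rate_mts: float) -> float:
    """Convert CAS latency from clock cycles to nanoseconds for DDR memory.

    DDR moves data twice per clock, so the clock in MHz is half the transfer
    rate in MT/s, and one cycle lasts 2000 / transfer_rate_mts nanoseconds.
    """
    return cas_cycles * 2000.0 / transfer_rate_mts


if __name__ == "__main__":
    # Sample figures only: a 7200 RPM hard drive and DDR4-3200 CL16 memory.
    print(f"HDD rotational latency: {average_rotational_latency_ms(7200):.2f} ms")
    print(f"CAS latency: {cas_latency_ns(16, 3200):.1f} ns")
```

The results differ by several orders of magnitude (milliseconds for the disk, nanoseconds for memory), which is why a slow disk tends to be far more noticeable than slow RAM.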

What are the Factors that Influence Latency?

Latency can be influenced by a variety of factors related to distance, hardware, and software. Here's a detailed breakdown:

Distance

Physical Distance:

Propagation Delay: The speed at which data moves through different types of connections affects latency. In fiber optic cables, for example, light travels at around 200,000 kilometers per second, roughly two-thirds of its speed in a vacuum. That is very fast, but covering long distances still takes time. In communication via geostationary satellites, signals can take a few hundred milliseconds to travel to space and back, which causes noticeable delays.

Geographic Separation: The bigger the physical distance between the client and server, the higher the latency due to the increased time required for data to travel.
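To put numbers on propagation delay, divide the distance by the signal speed. The sketch below uses the 200,000 km/s fiber figure from above. The 3,000 km cable path is a hypothetical distance picked for illustration, and the satellite case assumes a geostationary orbit about 35,786 km up with a signal moving at the vacuum speed of light. Real paths are longer than straight lines and add processing and queuing delay on top.

```python
FIBER_SPEED_KM_PER_S = 200_000   # approximate speed of light in optical fiber
VACUUM_SPEED_KM_PER_S = 299_792  # speed of light in a vacuum (radio link to a satellite)
GEO_ALTITUDE_KM = 35_786         # altitude of a geostationary orbit


def propagation_delay_ms(distance_km: float, speed_km_per_s: float) -> float:
    """One-way propagation delay in milliseconds."""
    return distance_km * 1000.0 / speed_km_per_s


def round_trip_ms(distance_km: float, speed_km_per_s: float) -> float:
    """There-and-back propagation delay in milliseconds."""
    return 2 * propagation_delay_ms(distance_km, speed_km_per_s)


if __name__ == "__main__":
    # Hypothetical 3,000 km fiber path between a client and a server.
    print(f"Fiber round trip: {round_trip_ms(3_000, FIBER_SPEED_KM_PER_S):.1f} ms")

    # Ground -> satellite -> ground is one direction, so a request and its
    # reply cross the up-and-down path twice.
    hop_km = 2 * GEO_ALTITUDE_KM
    print(f"Satellite round trip: {round_trip_ms(hop_km, VACUUM_SPEED_KM_PER_S):.0f} ms")
```

Even under these best-case assumptions, the satellite round trip comes out more than ten times longer than the fiber one, and no amount of extra bandwidth can shorten it.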

Network Path:

Indirect Routing: Data may take a longer, less direct route due to suboptimal routing or network topology. This can add to latency, especially if the path involves multiple hops through various network nodes.

Hardware Issues

Server Performance:

Load and Processing Power: Servers with high CPU or memory utilization can experience delays in processing requests, increasing latency.

Disk I/O Speed: Slow disk read/write speeds can affect how quickly data can be accessed or stored, contributing to latency.

Network Devices:

Router and Switch Performance: Routers and switches that are overloaded or not optimized can cause delays. Inefficient handling of packet routing and switching can increase latency.

Network Interface Cards (NICs): The performance of network interface cards in both servers and clients can impact latency. Older or lower-quality NICs may introduce additional delays.

Client Hardware:

Device Specifications: Older or less powerful devices (e.g., computers or smartphones) might process data more slowly, leading to higher latency.

Network Hardware: Poorly performing or outdated routers and modems on the client side can also affect latency.

Software Issues

Network Protocols:

Protocol Overhead: Different communication protocols add different amounts of extra work, which can impact latency. For instance, TCP (Transmission Control Protocol) sets up a connection with a handshake before sending data, confirms that data has arrived, and resends anything that gets lost. These features make it reliable but can slow things down. In contrast, UDP (User Datagram Protocol) is simpler and skips these steps, so it can send data with less delay, at the cost of offering no guarantee that the data arrives.

Protocol Implementation: Inefficient or old versions of communication protocols can cause extra delays. For example, outdated protocols may not work well with today's faster networks, leading to slower data transfer.

Configuration Issues:

DNS Resolution: Slow or misconfigured DNS servers can delay domain name resolution, adding to overall latency.

Caching Mechanisms: Inefficient caching strategies, such as inadequate cache sizes or outdated cache content, can lead to increased latency as data must be fetched from slower storage sources.
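The effect of caching on lookup latency can be seen in a small sketch. It wraps Python's standard `socket.gethostbyname` in `functools.lru_cache` and times the first call against a repeat call. The helper names `resolve` and `timed_ms` are made up for this example. Treat it as an illustration rather than a production resolver: this cache never expires its entries, so it shows the speed-up but would eventually serve outdated answers, which is exactly the stale-cache problem described above.

```python
import socket
import time
from functools import lru_cache


@lru_cache(maxsize=256)
def resolve(hostname: str) -> str:
    """Resolve a hostname to an IPv4 address, remembering previous answers."""
    return socket.gethostbyname(hostname)


def timed_ms(func, *args):
    """Call func(*args) and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()
    result = func(*args)
    return result, (time.perf_counter() - start) * 1000.0


if __name__ == "__main__":
    # "localhost" keeps the demo offline; substitute any hostname you can reach.
    for label in ("first call (uncached)", "second call (cached)"):
        address, elapsed = timed_ms(resolve, "localhost")
        print(f"{label}: {address} in {elapsed:.3f} ms")
    print(resolve.cache_info())
```

The second call is answered from memory without touching the resolver at all. Real DNS caches add an expiry time to each entry so that speed does not come at the cost of correctness.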

Operating System and Software Layers:

Operating System Overhead: The efficiency of the operating system in handling network tasks and system calls can affect latency. Systems with high overhead or inefficient handling of network operations may experience higher latency.

Driver Issues: Outdated or poorly optimized network drivers can contribute to increased latency by causing inefficient data handling or processing.

Effects of High Latency on Your Internet Usage

High latency can have several adverse effects on system performance and user experience, particularly in environments that require real-time interactions or quick responses. Here are some of the key effects:

Degraded Real-Time Communication

Video and Voice Calls: High latency in video and voice calls can lead to awkward communication gaps, where participants talk over each other or experience delays in hearing responses. This can make conversations feel unnatural and impair effective communication.

Live Streaming: In live streaming, high latency can cause delays in the broadcast, resulting in out-of-sync audio and video, buffering issues, and a disconnect between the streamer and viewers.

Impact on Online Gaming

In online gaming, high latency can lead to lag, where the actions of a player are delayed, causing desynchronization between players' actions and game events. This can result in an unfair disadvantage and negatively affect the gameplay experience, particularly in fast-paced or competitive games.

Poor User Experience

Delayed Response Times: High latency creates noticeable gaps between user actions and system reactions, making applications seem slow and unresponsive. A lag between typing and seeing the text appear in an online word processor, or between a player's input and the game's response, is both frustrating and disruptive.

Reduced System Performance

Slow Data Retrieval: In systems where data retrieval is time-sensitive, such as databases or cloud storage, high latency can significantly slow down operations. This affects tasks like loading large files, accessing remote databases, or syncing cloud data.

Delayed Processing: High processing latency in servers or applications can result in slower execution of tasks, impacting the overall throughput and efficiency of systems, particularly in environments like financial trading or scientific computing, where speed is critical.

Increased Error Rates

Network Timeouts: High network latency can cause timeouts in data transmission, where the system assumes the connection has failed and may terminate the session or require a retry. This is common in scenarios where strict timing is crucial, such as online transactions or streaming services.

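Packet Loss: High latency often goes hand in hand with packet loss, where data packets are dropped during transmission, because both are typical symptoms of a congested network. Real-time applications may also discard packets that arrive too late to be useful. Either way, service quality degrades, leading to incomplete downloads, broken video streams, or garbled audio in voice communications.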

Business Impact

Decreased Productivity: In a business environment, high latency can reduce productivity by slowing down access to necessary tools and data. For instance, employees may experience delays in accessing cloud-based applications or communicating through collaboration platforms, hindering their efficiency.

Customer Dissatisfaction: For businesses that rely on online services, such as e-commerce websites or customer support systems, high latency can result in slow load times and poor service quality, leading to customer frustration and potential loss of business.

Compromised System Stability

Server Overload: Prolonged high latency can contribute to server overload. Slow requests keep connections open longer, and clients that time out often retry, so pending work piles up until the system can no longer handle incoming requests efficiently. This can result in crashes, downtime, or the need for emergency scaling, which can be costly and disruptive.

Tools and Methods for Measuring Latency

Measuring latency is essential for diagnosing performance issues and optimizing systems. Common approaches include ping tests, dedicated software, and online testing tools, which are the simplest place to start:

Online Latency Testing Tools

Among the various options available, online tools like Speedtest and Fast.com stand out for their simplicity and effectiveness in measuring latency and overall internet performance.

Speedtest by Ookla

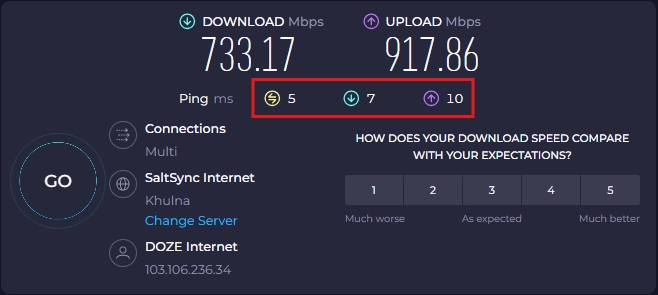

Speedtest is a widely used online tool that measures internet performance. When you start a test, Speedtest connects to a nearby test server and measures how long data packets take to travel there and back (the round-trip time).

Latency Measurements

Idle Latency: This is the round-trip time measured while the connection is otherwise idle, with no download or upload in progress. A lower idle latency indicates a more responsive connection, ideally below 20 ms for optimal performance.

Download Latency: This is the round-trip time measured while the connection is busy downloading data. It shows how responsive the connection stays under load, with lower values being preferable (under 30 ms is ideal).

Upload Latency: This is the round-trip time measured while the connection is busy uploading data. High upload latency can cause delays in activities like video calls and cloud uploads, with values ideally below 30 ms.
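A rough idle-latency check can also be scripted. The sketch below is not how Speedtest works internally; it is a simple stand-in that times TCP connection setup, which takes about one network round trip, and then summarizes several samples. The helper names, the `example.com` target, and the choice of five samples are placeholders for illustration. "Jitter" here is taken to mean the average change between consecutive samples.

```python
import socket
import statistics
import time


def tcp_connect_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time one TCP connection setup in milliseconds.

    If host is a name rather than an IP address, the result also includes
    the time spent resolving it.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Reduce raw samples to minimum, median, maximum and jitter."""
    # Jitter: mean absolute difference between consecutive samples.
    diffs = [abs(b - a) for a, b in zip(samples_ms, samples_ms[1:])]
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
        "jitter": statistics.mean(diffs) if diffs else 0.0,
    }


if __name__ == "__main__":
    # Placeholder target: replace with a server you actually use.
    samples = [tcp_connect_ms("example.com", 443) for _ in range(5)]
    for name, value in summarize(samples).items():
        print(f"{name}: {value:.1f} ms")
```

Run it while nothing else is using the connection and compare the median with the idle guideline above. Running it again during a large download gives a feel for latency under load.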

How Can You Improve Your Latency Issues?

High ping and latency can hinder your online experience, but several strategies can help. Switching to a wired Ethernet connection avoids wireless interference and provides a more stable connection. Adjusting your router’s Quality of Service (QoS) settings lets you prioritize essential traffic, and if you stay on Wi-Fi, keeping your device close to the router gives you a stronger signal.

Selecting geographically closer servers during online activities can significantly lower latency. Additionally, close unnecessary applications and keep your devices updated to ensure optimal performance. If issues persist, consider upgrading your internet plan or switching to an ISP with advanced technologies like fiber optics.

For more detailed strategies, check out our guide on how to Solve Your Ping and Latency Issues.

Conclusion

Keeping latency under control is key to a seamless digital experience, whether gaming, streaming, or using cloud services. Understanding its causes helps you take targeted actions like upgrading hardware, optimizing your network, or tweaking software.

As demand for faster interactions grows, managing latency becomes even more crucial. By applying the right techniques, you can enjoy a smoother and more responsive online experience, enhancing both speed and satisfaction.